FrictGAN

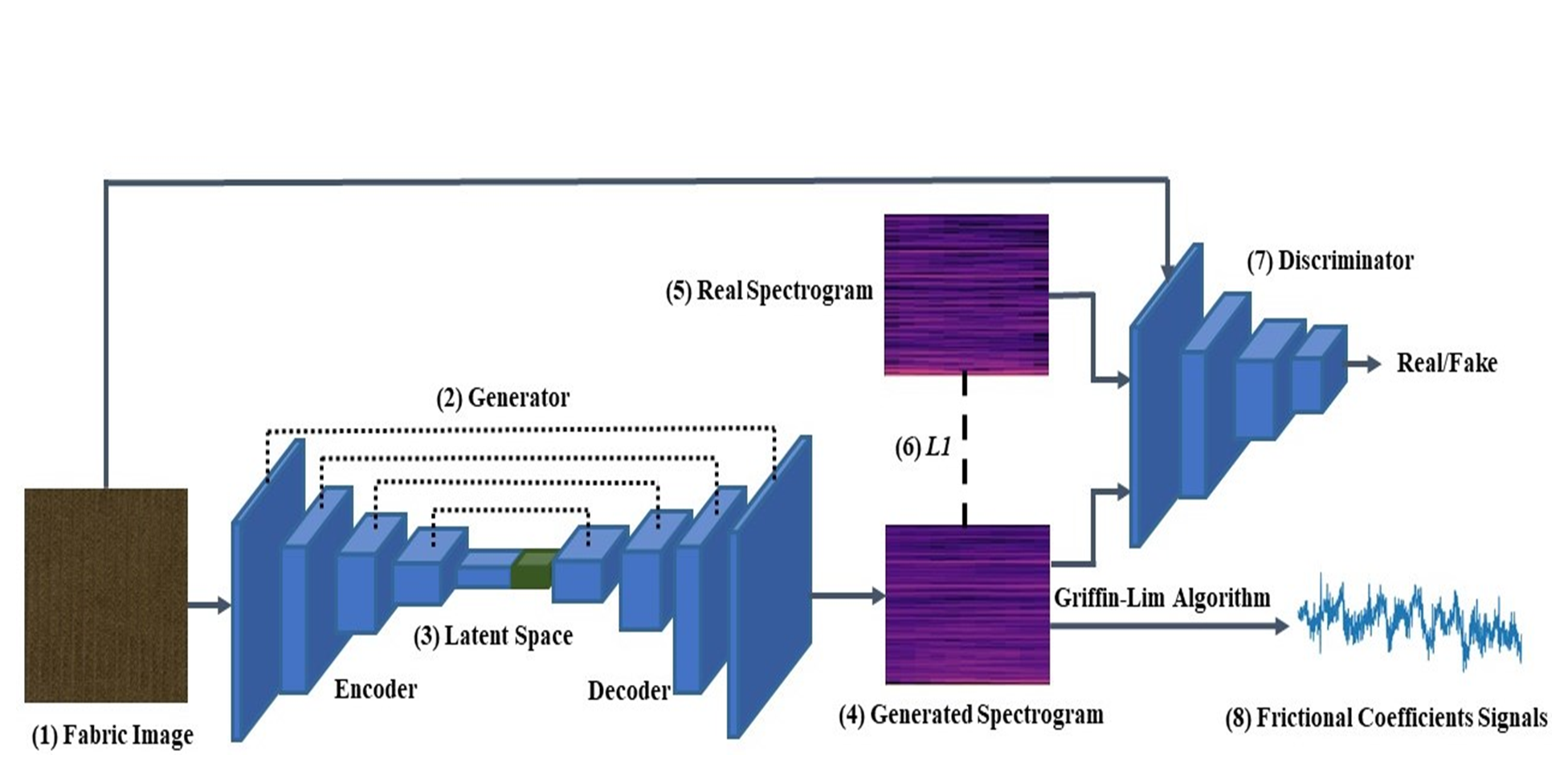

An electrostatic tactile display can render the tactile feel of different textured surfaces by modulating the voltage applied to the display, which changes the frictional force on a finger sliding across its surface. However, preparing and fine-tuning appropriate frictional signals for haptic design and texture simulation remains challenging. We present FrictGAN, a deep-learning-based framework that synthesizes frictional signals for electrostatic tactile displays from images of fabric textures. Using generative adversarial networks (GANs), FrictGAN generates displacement-series data of frictional coefficients, which the electrostatic tactile display uses to simulate the tactile feedback of fabric materials. Our preliminary experimental results showed that FrictGAN performed well at generating frictional signals from input images of fabric textures.
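The playback side of this pipeline can be illustrated with a short sketch. This is not FrictGAN's code. It assumes a common simplified electroadhesion model in which the applied voltage adds a normal force F_e = k·V², so the apparent friction coefficient becomes μ_app = μ_0·(1 + k·V²/F_n). Given a displacement-indexed friction series (the kind of output FrictGAN produces), the sketch looks up μ at the finger's current position and inverts the model to obtain a voltage command. The constants `MU_BASE`, `K_ELEC`, `V_MAX`, the constant normal force, the function names, and the example series are all hypothetical.

```python
import numpy as np

# --- Illustrative constants: assumptions, NOT values from the FrictGAN paper ---
MU_BASE = 0.5   # friction coefficient of the bare display at 0 V (assumed)
K_ELEC = 1e-5   # electroadhesion gain in N/V^2, assuming F_e = K_ELEC * V**2
V_MAX = 200.0   # assumed amplifier output limit in volts


def mu_at_position(x, positions, mu_series):
    """Linearly interpolate a displacement-indexed friction series at finger position x (m)."""
    return np.interp(x, positions, mu_series)


def voltage_for_mu(mu_target, normal_force, mu_base=MU_BASE, k=K_ELEC, v_max=V_MAX):
    """Solve mu_target = mu_base * (1 + k * V**2 / normal_force) for V.

    Electroadhesion can only add friction, so targets below mu_base map to 0 V,
    and the result is clipped to the amplifier limit v_max.
    """
    excess = np.maximum(np.asarray(mu_target, dtype=float) / mu_base - 1.0, 0.0)
    return np.minimum(np.sqrt(excess * normal_force / k), v_max)


def render(finger_x, positions, mu_series, normal_force=0.5):
    """Map sampled finger positions (m) to per-sample voltage commands (V).

    Because the series is indexed by displacement rather than time, the same
    texture plays back consistently regardless of how fast the finger moves.
    """
    mu = mu_at_position(finger_x, positions, mu_series)
    return voltage_for_mu(mu, normal_force)


if __name__ == "__main__":
    positions = np.linspace(0.0, 0.1, 5)              # 10 cm strip, one sample every 2.5 cm
    mu_series = np.array([0.5, 0.6, 0.5, 0.6, 0.5])   # made-up stand-in for a generated series
    finger_x = np.array([0.0, 0.025, 0.0375])          # sampled finger positions
    print(render(finger_x, positions, mu_series))
```

Indexing by displacement keeps the rendered texture tied to where the finger is on the surface. A real system would also measure the normal force instead of assuming it constant and drive the electrode with an AC carrier whose amplitude follows these commands.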

Best Paper Audience Choice Award

Authors: Shaoyu Cai, Yuki Ban, Takuji Narumi, Kening Zhu

Source code and data set: https://github.com/shaoyuca/FrictGAN