Multi-modal Transformer-based Tactile Signal Generation

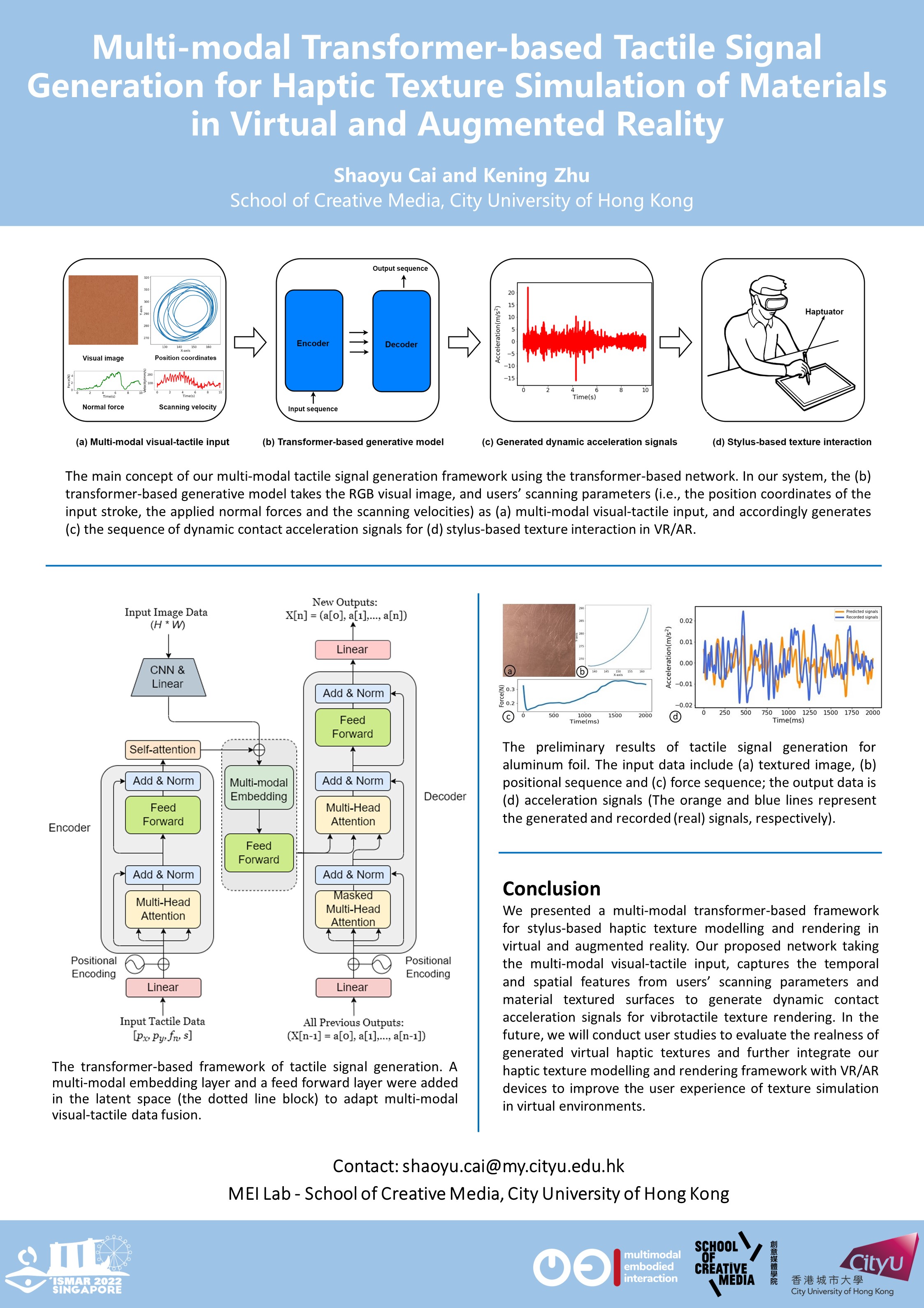

Current haptic devices can generate haptic texture sensations by replaying recorded tactile signals, allowing users to interact with the textures of different materials in virtual reality (VR) and augmented reality (AR). However, humans feel different sensations from the same texture under different scanning parameters (i.e., applied normal force, scanning velocity, and stroking direction/position on the material surface), so replay-based methods cannot render natural haptic textures across varying scanning parameters. To this end, we propose a deep-learning-based approach for multi-modal tactile signal generation built on a transformer-based network. Our system takes an image of a material surface as visual data and, as tactile data, the acceleration signals induced by a pen sliding across the surface together with the corresponding scanning parameters. A transformer-based generative model with a multi-modal feature embedding module then synthesizes acceleration signals from these inputs. We aim to synthesize dynamic acceleration signals from images of material surfaces and the users’ scanning states to create natural and realistic texture sensations in VR/AR.
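
As a rough illustration of how the three kinds of input described above could be laid out as one sequence before a multi-modal embedding module, the following minimal sketch tags each token with its modality. It is not the authors' implementation: the patch and window tokenization, the `ScanState` container, the units, and all function names are assumptions made for illustration only.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ScanState:
    """Hypothetical container for the scanning parameters named in the abstract."""
    normal_force: float            # applied normal force (unit assumed: N)
    velocity: float                # scanning velocity (unit assumed: mm/s)
    direction: float               # stroking direction (assumed: radians)
    position: Tuple[float, float]  # stroking position (assumed normalized to [0, 1])


def image_to_patches(image: List[List[float]], patch: int) -> List[List[float]]:
    """Split a 2-D grayscale image into flattened, non-overlapping patch tokens."""
    h, w = len(image), len(image[0])
    tokens = []
    for r in range(0, h - patch + 1, patch):
        for c in range(0, w - patch + 1, patch):
            tokens.append([image[r + i][c + j]
                           for i in range(patch) for j in range(patch)])
    return tokens


def signal_to_windows(accel: List[float], window: int) -> List[List[float]]:
    """Split a 1-D acceleration signal into non-overlapping windows."""
    return [accel[i:i + window]
            for i in range(0, len(accel) - window + 1, window)]


def build_sequence(image, accel, scan: ScanState, patch: int = 2, window: int = 4):
    """Return (modality, values) tokens: visual patches, one scan-state token,
    then tactile windows. An embedding layer (not shown) would project each
    modality to a shared width before the transformer."""
    seq = [("visual", t) for t in image_to_patches(image, patch)]
    seq.append(("scan", [scan.normal_force, scan.velocity,
                         scan.direction, *scan.position]))
    seq += [("tactile", w) for w in signal_to_windows(accel, window)]
    return seq
```

Keeping the modality tag next to each token makes it straightforward to apply a separate projection per modality later, which is one plausible reading of a "multi-modal feature embedding module"; the actual design may differ.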

Authors: Shaoyu Cai and Kening Zhu